How does ChatGPT work: An in-depth look for Programmers

A bit about what's going on behind the scenes, in case you don't know yet

Hello beautiful programmers!

Welcome to the Programming and Doodles blog! Today, we’re going to talk about how OpenAI’s ChatGPT works. Yes, the GPT that helps you with everything and still gets yelled at.

Here’s the catch though: we are not going to talk about how AI/ML models work in general. Everyone in programming or tech knows roughly how they work: they are trained on a sample dataset and then produce outputs based on the patterns found in that data.

We are going to specifically talk about how OpenAI has built its ChatGPT model, the most used chatbot as of December 2024. We are going to look at its software architecture, the use of advanced transformer networks, and how Reinforcement Learning from Human Feedback (RLHF) fine-tunes the model for conversational tasks. We'll also examine the integration of scalability and real-time updates to ensure accuracy and context-awareness in responses.

And a quick twist: this was not written by ChatGPT (despite Quillbot’s accusation). Scroll down and see for yourself!

At a glance

ChatGPT, powered by OpenAI’s latest model, GPT-4, is a “sophisticated” deep learning model based on the Transformer architecture, which is at the heart of modern Natural Language Processing (or, as we call it, NLP).

What’s explained in the next sections is how ChatGPT takes your input and produces outputs: breaking down text into tokens, understanding context with the Transformer architecture, generating predictions step by step, and refining outputs.

Let me break it down.

1. The Transformer Architecture

Are you old enough to remember RNNs (Recurrent Neural Networks)? Then I’m sure you know that the “Transformer” model, introduced in 2017, has largely replaced them.

If you’re wondering how the Transformer model works, think of it like this: imagine reading a book where you can see every word on the page at the same time. That’s what the Transformer does: it looks at all the words at once rather than word by word. This improves both speed and understanding.

These Transformers use self-attention, which helps the model focus on the most important parts of a sentence while giving less weight to irrelevant information (which may also be why you sometimes need to repeat parts of your prompt when working with ChatGPT).
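To make self-attention a little more concrete, here is a minimal sketch in plain Python. It is a simplification, not how GPT-4 is actually implemented: the token vectors are made up, and the learned query, key, and value projections that real Transformers use are left out, so each vector plays all three roles.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention with queries = keys = values.

    Assumption: no learned projection matrices, to keep the sketch short.
    """
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Compare this token with every token in the sentence at once.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # The new representation is a weighted mix of all token vectors.
        mixed = [
            sum(w * vec[i] for w, vec in zip(weights, vectors))
            for i in range(dim)
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Three made-up 2-dimensional token vectors (purely illustrative).
tokens = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
outputs, weights = self_attention(tokens)
```

In this toy run, the first token puts more weight on the second token (which points in a similar direction) than on the third, which is the "focus on what matters" behavior described above.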

After this attention layer identifies the relevant words, a feedforward network polishes the output by applying learned weights (i.e., trained parameters: numerical values adjusted during the model’s optimization process). This combination helps ChatGPT deliver smart and quick responses. Most of the time, actually.
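As a rough illustration of that feedforward step, here is a tiny two-layer network in plain Python. The weights below are made-up numbers rather than trained parameters, and ReLU is used as the activation for simplicity (GPT-style models typically use a smoother activation such as GELU).

```python
def linear(vector, weights, bias):
    """Multiply a vector by a weight matrix (list of rows) and add a bias."""
    return [
        sum(w * x for w, x in zip(row, vector)) + b
        for row, b in zip(weights, bias)
    ]

def relu(vector):
    """Keep positive values, zero out negative ones."""
    return [max(0.0, x) for x in vector]

def feed_forward(vector, w1, b1, w2, b2):
    """Expand to a hidden layer, apply the activation, project back."""
    hidden = relu(linear(vector, w1, b1))
    return linear(hidden, w2, b2)

# Hypothetical "learned" weights: 2 inputs -> 3 hidden units -> 2 outputs.
W1 = [[1.0, -1.0], [0.5, 0.5], [-1.0, 1.0]]
B1 = [0.0, 0.0, 0.0]
W2 = [[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]]
B2 = [0.0, 0.1]

result = feed_forward([2.0, 1.0], W1, B1, W2, B2)
```

In a real Transformer this same small network is applied to every token position independently, right after the attention layer.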

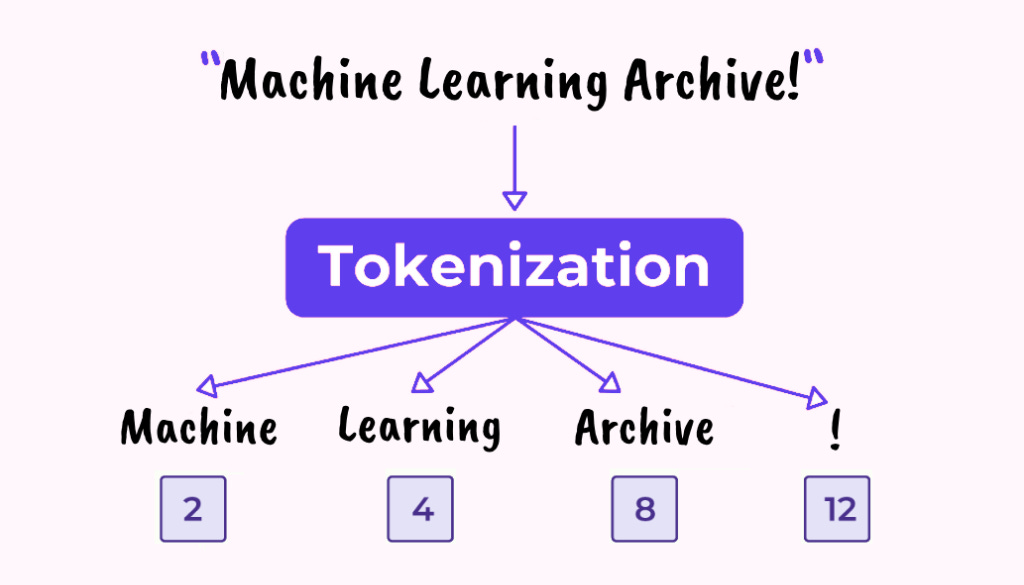

2. Tokenization

Whenever you enter text into ChatGPT, the first thing it does is break it into small units, which we call “tokens”. This is done through a method like Byte Pair Encoding (BPE), and that splitting step is what we call tokenization. Thereafter, these tokens are converted into vectors (numerical representations, known as embeddings) that machines can understand.

For example, if you type hello world, the model breaks it down into tokens like “hello” and “world”, and looks up a vector for each. During the training phase, these vectors are adjusted to capture the relationships between those words, which is explained in the next section.
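Here is a toy sketch of the idea behind Byte Pair Encoding, followed by an embedding lookup. It is only an illustration: the four-word corpus, the number of merges, and the random 4-dimensional vectors are all assumptions, and ChatGPT’s real tokenizer works on bytes with a far larger vocabulary.

```python
import random
from collections import Counter

def most_frequent_pair(words):
    """Count adjacent symbol pairs across all words; return the commonest."""
    pairs = Counter()
    for symbols in words:
        for left, right in zip(symbols, symbols[1:]):
            pairs[(left, right)] += 1
    return pairs.most_common(1)[0][0] if pairs else None

def merge_pair(symbols, pair):
    """Replace every occurrence of `pair` in one word with a merged symbol."""
    merged, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return merged

def learn_bpe(corpus, num_merges):
    """Start from single characters and repeatedly merge the commonest pair."""
    words = [list(word) for word in corpus]
    merges = []
    for _ in range(num_merges):
        pair = most_frequent_pair(words)
        if pair is None:
            break
        merges.append(pair)
        words = [merge_pair(symbols, pair) for symbols in words]
    return merges, words

# Made-up training corpus; frequent character pairs get merged first.
corpus = ["hello", "hello", "help", "world"]
merges, words = learn_bpe(corpus, num_merges=4)

# Give each distinct token an id, then look up a vector for it.
all_tokens = sorted({symbol for symbols in words for symbol in symbols})
vocab = {token: idx for idx, token in enumerate(all_tokens)}
rng = random.Random(0)  # random stand-ins for learned embeddings
embeddings = [[rng.uniform(-1, 1) for _ in range(4)] for _ in vocab]
hello_vector = embeddings[vocab["hello"]]
```

After four merges, the frequent word “hello” has collapsed into a single token, while the rarer “help” is still split into “hel” and “p”. That is the trade-off BPE makes: common strings get their own token, rare ones are built from smaller pieces.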

3. Training

As you might already know, GPT-4 is trained on large amounts of text, from books, websites, research papers, and other resources. It has learned language rules as well as patterns by reading millions of sentences from those sources.

This is very similar to how children learn to speak through exposure to conversations and reading, only a bit easier and more expensive.

Let’s not talk much about training here; there are plenty of resources explaining how ChatGPT is trained, and here’s one video on YouTube that simplifies the thing:

Oh, but this is important: to optimize the model, something called “backpropagation” is used. In the simplest terms, backpropagation is a fancy word for reverse feedback. The system checks how far off its “guess” was (we call this the “loss” value in machine learning), works backward through its calculations, and adjusts itself to do better next time.
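The guess, check, work backward, adjust loop can be sketched with a tiny two-weight "network". Everything here is a toy assumption (the input, the target, the starting weights, and the learning rate); a real model does the same thing across billions of parameters using an automatic differentiation library.

```python
def train(x, target, w1=0.5, w2=0.5, learning_rate=0.1, steps=50):
    """Fit guess = w2 * (w1 * x) to a target with hand-written backpropagation."""
    losses = []
    for _ in range(steps):
        # Forward pass: compute the guess and how far off it was.
        hidden = w1 * x
        guess = w2 * hidden
        loss = (guess - target) ** 2
        losses.append(loss)

        # Backward pass: apply the chain rule from the loss back to each weight.
        d_guess = 2 * (guess - target)   # d(loss) / d(guess)
        d_w2 = d_guess * hidden          # d(loss) / d(w2)
        d_hidden = d_guess * w2          # d(loss) / d(hidden)
        d_w1 = d_hidden * x              # d(loss) / d(w1)

        # Adjust each weight a little in the direction that lowers the loss.
        w1 -= learning_rate * d_w1
        w2 -= learning_rate * d_w2
    return w1, w2, losses

w1, w2, losses = train(x=1.0, target=2.0)
```

The list of losses shrinks step by step, which is the whole point: every pass through the loop makes the next guess a bit less wrong.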

Yup, ChatGPT is such a self-motivated and self-driven individual!

4. Response Generation

When we ask ChatGPT a question, it generates the answer one token (roughly, one word or word piece) at a time. Sure, its beautiful front end makes it look like a real person is messaging you in real time, but that’s not what’s really happening.

It’s also similar to the game of “20 Questions”, where you gradually build the answer based on each previous guess.

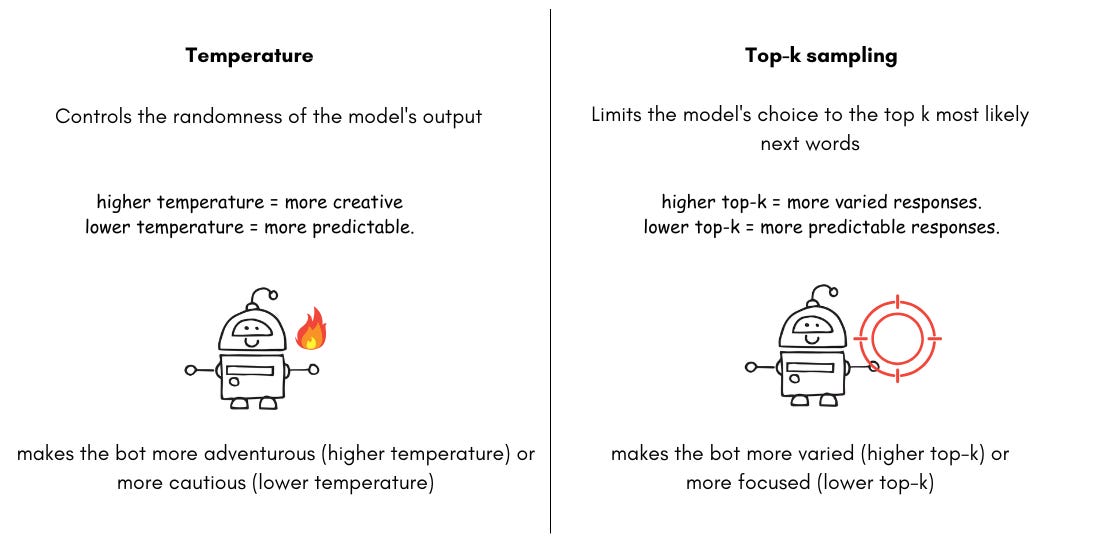
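The step-by-step generation described above can be sketched as a simple loop. The probability table below is invented for illustration, and the hypothetical next_token_probs function only looks at the last token; a real model conditions on the entire conversation so far.

```python
# Made-up next-token probabilities, standing in for a trained model.
NEXT_TOKEN_PROBS = {
    "<start>": {"the": 0.9, "a": 0.1},
    "the": {"cat": 0.6, "dog": 0.4},
    "a": {"cat": 0.5, "dog": 0.5},
    "cat": {"sat": 0.7, "ran": 0.3},
    "dog": {"ran": 0.8, "sat": 0.2},
    "sat": {"<end>": 1.0},
    "ran": {"<end>": 1.0},
}

def next_token_probs(context):
    """Hypothetical model call: returns a probability for each next token."""
    return NEXT_TOKEN_PROBS[context[-1]]

def generate(max_tokens=10):
    """Build a reply one token at a time, always picking the likeliest one."""
    context = ["<start>"]
    while len(context) <= max_tokens:
        probs = next_token_probs(context)
        best = max(probs, key=probs.get)  # greedy choice
        if best == "<end>":
            break
        context.append(best)  # the new token becomes part of the context
    return context[1:]

reply = generate()
```

Each chosen token is fed back in as context for the next prediction, which is why the reply appears to be "typed out" piece by piece.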

But also note that ChatGPT uses different techniques (or should we call them tricks?) to make responses more varied and accurate, like temperature and top-k sampling.

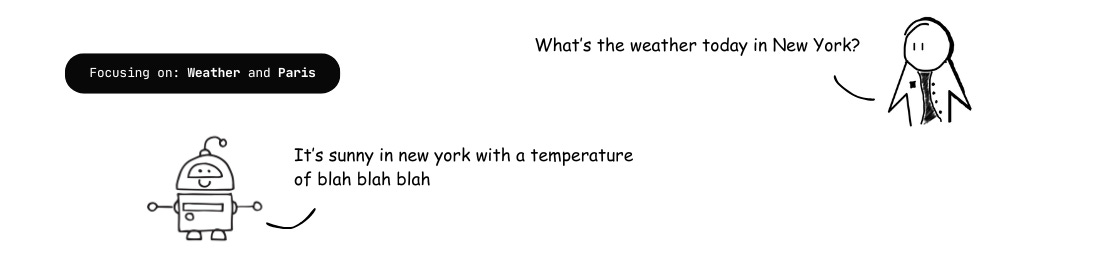

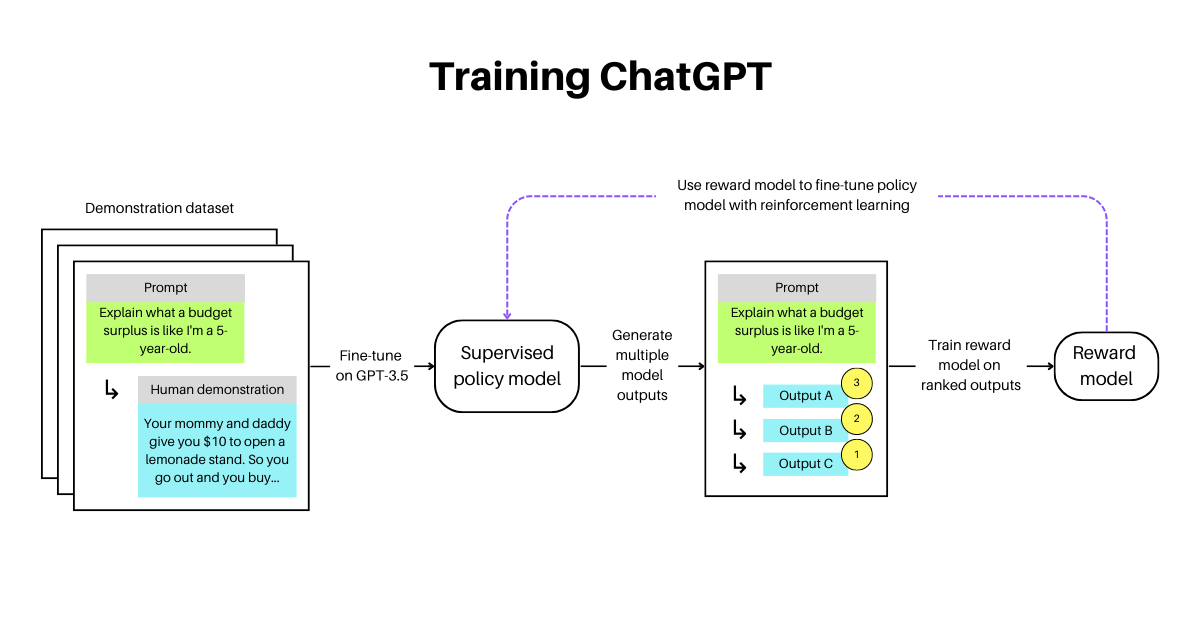
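Temperature and top-k sampling are easy to try out in a few lines. This is a minimal sketch, not OpenAI’s actual sampling code: the scores are made up, and sample_next_token is a hypothetical helper name.

```python
import math
import random

def sample_next_token(logits, temperature=1.0, top_k=None, rng=random):
    """Pick the next token from raw scores using temperature and top-k.

    temperature must be greater than 0: low values make the choice almost
    deterministic, high values make it more random.
    top_k keeps only the k highest-scoring tokens before sampling.
    """
    ranked = sorted(logits.items(), key=lambda item: item[1], reverse=True)
    if top_k is not None:
        ranked = ranked[:top_k]  # drop everything outside the top k

    # Dividing by the temperature sharpens or flattens the distribution.
    scaled = [score / temperature for _, score in ranked]
    peak = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scaled]

    candidates = [token for token, _ in ranked]
    return rng.choices(candidates, weights=weights, k=1)[0]

# Made-up scores for the word that follows "I love eating ...".
logits = {"pizza": 2.0, "pasta": 1.0, "rocks": -1.0}
rng = random.Random(42)
picks = [sample_next_token(logits, temperature=0.8, top_k=2, rng=rng)
         for _ in range(20)]
```

With top_k=2, “rocks” can never be chosen no matter how many times you sample, while the temperature decides how often “pasta” sneaks in ahead of “pizza”.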

5. Reinforcement Learning from Human Feedback (RLHF)

When it comes to fine-tuning ChatGPT, RLHF is an important process. As the name suggests, it’s a process that refines ChatGPT’s responses by using feedback from human evaluators. Of course, ChatGPT is already capable of generating responses by itself, but it requires fine-tuning to ensure its responses are contextually relevant.

Just imagine: after all this fine-tuning, it still provides contextually irrelevant info sometimes. If there were no fine-tuning at all, ChatGPT would descend into chaos.

Jokes aside, the overall purpose of RLHF is to help ChatGPT become more aligned with user expectations and thereby produce better and more accurate interactions.
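One piece of RLHF that is easy to show in code is how human preferences can be turned into a training signal. The sketch below uses a pairwise preference loss, a common formulation for training reward models; treat it as an assumption, since this article does not cover how ChatGPT’s reward model is actually trained, and the reward numbers are made up.

```python
import math

def preference_loss(reward_chosen, reward_rejected):
    """Pairwise loss: small when the human-preferred answer scores higher.

    Computes -log(sigmoid(reward_chosen - reward_rejected)).
    """
    margin = reward_chosen - reward_rejected
    return -math.log(1.0 / (1.0 + math.exp(-margin)))

# Hypothetical reward scores for two answers to the same prompt,
# where a human evaluator preferred the first answer.
agrees_with_human = preference_loss(reward_chosen=2.0, reward_rejected=0.0)
disagrees_with_human = preference_loss(reward_chosen=0.0, reward_rejected=2.0)
```

The loss is low when the reward model ranks the answers the same way the human did and high when it disagrees. Minimizing it teaches the reward model to score answers like a human evaluator would, and that reward model is then used to fine-tune the chatbot itself.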

And that should be all. Hope I covered everything! Oh, wait a minute, why don’t I ask ChatGPT for a review of this article?

Wrapping Up

You might feel like this article was too fast-moving and short. But that’s mostly because it was intended for programmers! What I wanted to do here was provide lesser-known details and facts about ChatGPT, not the things everybody knows. That’s why some sections have a lot of content and others have less.

But if you still have a hard time understanding what’s going on behind the code in ChatGPT, please shoot me an email or connect with me on LinkedIn.

If you loved this article, make sure to subscribe using your email, so you can read all my content inside your inbox without missing any!

Finally, an explanation even I can understand. Great job!